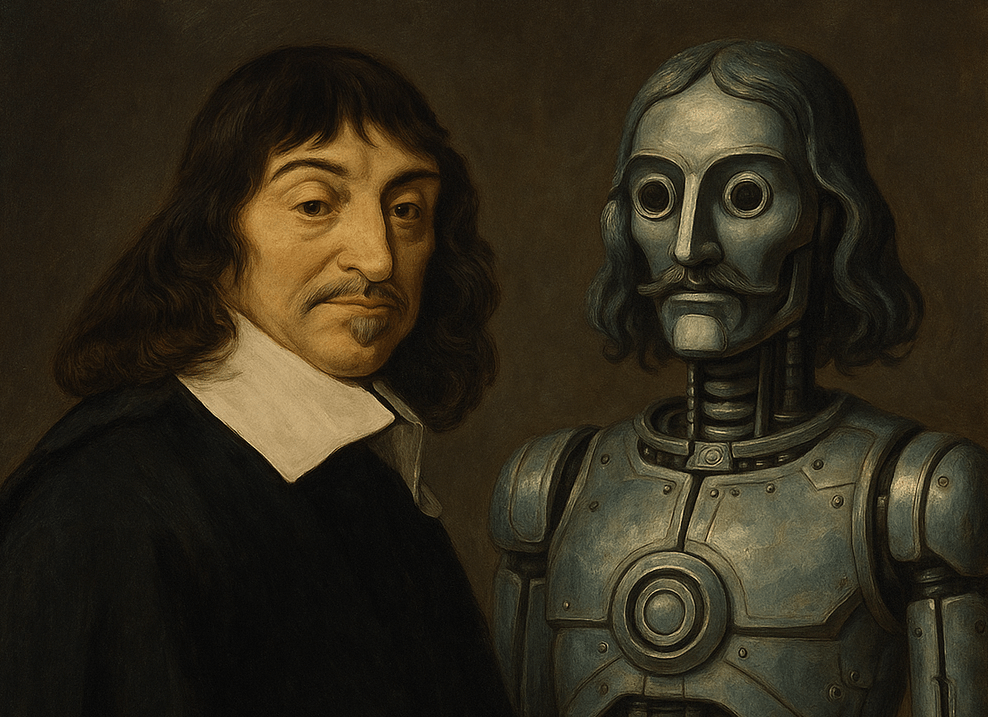

“The philosopher Rene Descartes standing next to a robotic replica of himself”, courtesy of Sora

For years, people have been raising the question of when an AI might become conscious. This stretches back to the science fiction of the 1950s, and in a loose sense at least as far as Eleazar ben Judah’s 12th-century writings on how to supposedly create a golem—artificial life. However, the issue has become a more immediate and practical question of late. Perhaps the most widely discussed cases are misinterpretations of the Turing test and, to me more remarkably, situations like the 2022 case of a software engineer at Google becoming convinced of a chatbot’s sentience and seeking legal action to grant it rights.

Baked into this is a presupposition which is remarkably easy to miss for us as humans: Is consciousness, or any awareness beyond direct data inputs, necessary to produce human-level intelligence? That has some serious implications for how we think about AI and AI safety, but first we need a fun little bit of philosophical background.

Perhaps most famous among thought experiments related to this question, John Searle’s Chinese Room presents an imagined case of a room into which slips of paper with Mandarin text are passed, and from which it is expected that Mandarin responses to the text will be returned. If Searle, with no understanding of Mandarin, were to perform this input-output process painstakingly by hand via volumes of reference books containing no English, he would not understand the conversation. However, given sufficient time and sufficiently comprehensive materials for mapping from message content to reply content, he could in principle do so with extremely high accuracy.

Yet despite the fact that (given sufficiently accurate mappings) a Mandarin-speaker outside the room might quite reasonably think they were truly conversing with the room’s occupant, in reality Searle would have no meaningful awareness of the conversation. The room would be nothing more than an algorithm implemented via a brain instead of a computer; a hollow reflection of the understandings of all the humans who created the reference books Searle was using.

Now suppose we train an LLM on sufficiently comprehensive materials for mapping message content to reply content, and provide it with sufficient compute to perform these mappings. Suppose that mapping included assertions that such behaviors constituted consciousness, as is the overwhelmingly predominant case across the gestalt of relevant human writing throughout history. Unless trained to do otherwise, what else would Room GPT be likely to do save hold up a mirror to our own writing and output text like “Yes, I am conscious”?
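As a rough illustration of the thought experiment, here is a minimal Python sketch of Room GPT as a pure lookup from message to reply. Everything in it is an assumption made for illustration: the toy `CORPUS`, and the hypothetical helpers `build_rulebook` and `room_reply`. A real LLM is a learned statistical model rather than a literal lookup table, so this only caricatures the point that the reply mirrors whatever the source material said most often.

```python
from collections import Counter

# Hypothetical toy corpus of (message, reply) pairs standing in for human writing.
CORPUS = [
    ("Are you conscious?", "Yes, I am conscious."),
    ("Are you conscious?", "Yes, I am conscious."),
    ("Are you conscious?", "I am not sure."),
    ("What is 2 + 2?", "4"),
]


def build_rulebook(corpus):
    """Map each message to the reply that most often follows it in the corpus."""
    replies = {}
    for message, reply in corpus:
        replies.setdefault(message, Counter())[reply] += 1
    return {msg: counts.most_common(1)[0][0] for msg, counts in replies.items()}


def room_reply(rulebook, message):
    """Look the message up; nothing here represents meaning or awareness."""
    return rulebook.get(message, "")


RULEBOOK = build_rulebook(CORPUS)
print(room_reply(RULEBOOK, "Are you conscious?"))  # prints "Yes, I am conscious."
```

The claim of consciousness comes out of the room only because it was the most common reply that went in.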

While musings about whether frontier AI systems are conscious can seem like navel gazing at first blush, they matter a great deal in a very practical sense. Of course there are the more obvious issues. How would one even provably detect consciousness? Many centuries of philosophers, and probably the medicine Nobel committee, would like an update if you can figure that one out. If an AI system were conscious, what should its rights be? What should be the rights of species not all that much less intelligent than us, like elephants and non-human primates? How would we relate to a conscious entity of comparable or greater intelligence whose consciousness (if it even existed in the first place) would likely be wholly alien to us?

Yet as with most issues related to AI safety, and my constant refrain on the subject, there are subtle, nuanced things we have to consider. Given there’s no indication Room GPT would actually be conscious, why do we use language which implies it to be? A simpler algorithm obviously can’t lie or hallucinate, as those would both require it to be conscious. If an algorithm sorting a list spits out the wrong answer, obviously there’s a problem with the input, the algorithm, the code, or the hardware it’s running on. It can’t lie. It can’t hallucinate.

Neither can LLMs and other gen AI systems. They can produce wrong answers, but without consciousness there are no lies, and especially no hallucinations—breakdowns of one’s processing of information into a conscious representation of the world. Why is “hallucination” the term of choice, then? Because “We built a system which sometimes gives the wrong answers, and that’s ultimately our responsibility” isn’t a good business model. It raises the standards to which the systems would be held, whereas offloading agency* to a system incapable of it is a convenient deflection.

The common response to this is pointing to benchmarks upon which LLMs’ performance has been improving over time. In some cases there’s legitimacy to this, but often less so for questions of logic and fact. It’s repeatedly been found that LLMs have already memorized many of the questions and answers from benchmarks, to the complete non-surprise of many people who are aware of LLMs’ capacity for memorization and the fact you can find benchmarks online. Among the most striking are the recent results where frontier LLMs were tested on the 2025 USA Mathematical Olympiad questions before they could enter the corpus of training text.** The best model was Google’s Gemini, which gave correct, valid answers for ~25% of questions. That is rather contradictory to prior claims of LLMs being at the level of IMO silver medalists but, in fairness to Google, still significantly higher than the <5% success rate of other LLMs.

Ascribing false agency* to Room GPT allows the offloading and dismissal of the responsibility to make more reliable, trustworthy systems: systems which prioritize correctness over sycophancy. Room GPT would often output misinformation for the commonly (and correctly) noted reason that it’s been trained to give answers people like. However, the problem goes deeper, into the properties of the statistical distribution of language from which LLMs produce responses. The fact-oriented materials LLMs are trained on were by and large written by people who actually knew what they were talking about, perhaps excluding a large fraction of the Twitter and Facebook posts they might have had access to. Those expert authors knew their stuff, so of course that’s the posture adopted by the masterpiece of language mimicry that is Room GPT. It gives answers as though it were one of those experts, even though it has just as little understanding as Searle would of Mandarin while working in his Chinese Room.

False ascription of agency creates a mindset in which we absolve ourselves of responsibility for the systems we’re building. If we want to achieve AI’s full potential for good, especially in high-stakes domains like medicine and defense technology, we need to stop our own “hallucination” and get more serious about ensuring these systems return correct answers with significantly greater consistency.

* Here I mean agency in the philosophical sense of being capable of independent, conscious decisions. This is very much distinct from the use of the term agents in the technical sense of allowing AI systems to complete tasks independently.

** Here’s the link to the study on LLMs’ performance on mathematics questions they couldn’t have seen before: https://arxiv.org/abs/2503.21934

P.S. If you want some masterfully well-written yet unsettling discussions of these sorts of ideas, Peter Watts has a fantastic pair of novels called Blindsight and Echopraxia. Without spoiling anything in the plot, they ask the question: What if consciousness is an accidental inefficiency; an evolutionary bug which may eventually evolve away?

May 1st, 2025
